Dataset.

Dataset file structure

HCL_Full

|── 09-23-13-44-53-1

| |── bin (LiDAR)

| | |── 1663912046.036171264.bin

| | |── 1663912046.135965440.bin

| | |── ...

| |── imu_csv (IMU)

| | |── 1663912046015329873.csv

| | |── 1663912046025090565.csv

| | |── ...

| |── img_blur (Camera)

| | |── cam1

| | | |── 1663912046.036171264.jpg

| | | |── 1663912046.135965440.jpg

| | | |── ...

| | |── cam2

| | |── cam6

|── 09-23-13-44-53-2

|── ...

Specification

- Point clouds are stored in binary format (bin). Use np.fromfile(file_path, dtype=np.float32).reshape(-1, 5) to load the file. Columns 0-4 represent x, y, z, reflectivity, and timestamp (t), respectively.

- Images are downsampled to 10 Hz and temporally aligned with the LiDAR data. After alignment, each image is renamed to match the name of the corresponding LiDAR file. The original images (32 Hz) will be released soon.

- To preserve its high sampling density, the IMU data is neither downsampled nor aligned.
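
The loading rule above can be sketched as follows. This is a minimal example, not the authors' tooling: the helper names load_point_cloud and matching_image_path are hypothetical, and the image lookup assumes the folder layout shown in the tree above (bin and img_blur/camN inside the same sequence folder, with identical file stems).

```python
from pathlib import Path

import numpy as np


def load_point_cloud(bin_path):
    """Load one LiDAR frame as an (N, 5) float32 array.

    Columns: x, y, z, reflectivity, timestamp (t).
    """
    points = np.fromfile(bin_path, dtype=np.float32)
    if points.size % 5 != 0:
        raise ValueError(f"{bin_path}: length is not a multiple of 5 floats")
    return points.reshape(-1, 5)


def matching_image_path(bin_path, cam="cam1"):
    """Return the image path that shares the LiDAR frame's file name.

    Assumes <sequence>/bin/<stamp>.bin and
    <sequence>/img_blur/<cam>/<stamp>.jpg (layout taken from the tree above).
    """
    bin_path = Path(bin_path)
    sequence_dir = bin_path.parent.parent
    return sequence_dir / "img_blur" / cam / (bin_path.stem + ".jpg")
```

The size check is only a guard against truncated files; it is not part of the dataset specification.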

Annotation structure

09-23-13-44-53-1.json

{

"data": "09-23-13-44-53-1",//corresponding data folder

"frames_number": 44,//number of frames

"frame": [

{

"frameId": 0,//frame id

"timestamp": 1663912047.0359857,//timestamp

"pc_name": "09-23-13-44-53-1/bin/1663912047.035985664.bin",//point cloud path

"instance_number": 5,//number of instances

"instance": [

{

"id": "a5f9185a-5719-4414-9ce5-1ba9316d7050",//unique uuid

"number": 1,//global id

"category": "person",//category

"action": "moving boxes,walking",//action

"pointCount": 358,//number of points

"seg_points": [

119855,

119856,

...

],//indices of points

"occlusion": 0,//occlusion level(0-1)

"position": {

"x": 8.642838478088379,

"y": -1.170599341392517,

"z": -0.4981747269630432

},//bbox position

"rotation": 1.1941385296061557,//bbox rotation(Yaw)

"boundingbox3d": {

"x": 0.7874413728713989,

"y": 0.4814544916152954,

"z": 1.5814100503921509

}//bbox dimensions

},

{...},

...

]

},

{...},

...

]

}
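
As an illustration of how the bounding-box fields above might be used, the sketch below converts position, rotation, and boundingbox3d into eight corner points. It rests on assumptions the listing does not state explicitly: position is taken to be the box centre, boundingbox3d the full extents along the box's own axes, and rotation a yaw angle in radians about the z axis. The function name box_corners is hypothetical.

```python
import numpy as np


def box_corners(position, rotation, boundingbox3d):
    """Return the 8 corners of a yaw-rotated box as an (8, 3) array.

    Assumed conventions (not confirmed by the dataset description):
    'position' is the box centre, 'boundingbox3d' holds full extents,
    'rotation' is yaw in radians about the z axis.
    """
    extents = np.array(
        [boundingbox3d["x"], boundingbox3d["y"], boundingbox3d["z"]],
        dtype=np.float64,
    )
    # All sign combinations (+/-1) give the corners of a unit box.
    signs = np.array(
        [[sx, sy, sz] for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)],
        dtype=np.float64,
    )
    local = signs * extents / 2.0
    c, s = np.cos(rotation), np.sin(rotation)
    rot_z = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    centre = np.array(
        [position["x"], position["y"], position["z"]], dtype=np.float64
    )
    # Rotate each local corner about z, then translate to the centre.
    return local @ rot_z.T + centre
```

If the real convention differs (for example, degrees instead of radians), only the rotation line needs to change.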

Specification

- Each annotation file is named after its corresponding data folder in the dataset (for example, 09-23-13-44-53-1.json annotates the folder 09-23-13-44-53-1).

- Note: An instance 'id' is only valid within the JSON file it originates from.
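
A minimal sketch of reading one annotation file is given below. It assumes that the released JSON files are plain JSON (the // comments in the listing above being documentation only) and that seg_points holds 0-based row indices into the point cloud of the same frame. Both helper names are hypothetical. Because an instance id is local to its file, the sketch pairs it with the sequence name to form a key.

```python
import json

import numpy as np


def instance_points(points, instance):
    """Select the rows of an (N, 5) point array listed in 'seg_points'.

    Assumes 'seg_points' are 0-based indices into the frame's point cloud.
    """
    idx = np.asarray(instance["seg_points"], dtype=np.int64)
    return points[idx]


def iter_instances(annotation_path):
    """Yield (key, frame, instance) for every instance in one annotation file.

    The key is (sequence name, instance id), since the id alone is only
    meaningful inside the JSON file it comes from.
    """
    with open(annotation_path, "r", encoding="utf-8") as f:
        ann = json.load(f)  # assumes the real files contain no // comments
    for frame in ann["frame"]:
        for inst in frame["instance"]:
            yield (ann["data"], inst["id"]), frame, inst
```

Each frame's pc_name gives the point cloud path relative to the dataset root, so the points for an instance can be obtained by loading that file and passing the array to instance_points.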

Split file structure

HCL_split.json

{

"train": [

"10-01-18-42-05-2.json",

"10-01-18-55-50-2.json",

...

],

"test": [

"10-03-16-35-25-1.json",

"10-03-16-35-25-2.json",

...

]

}
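
The split file can be read with the standard json module. The sketch below is one possible way to resolve the listed names to annotation paths; load_split and the annotation_dir argument are hypothetical, since the location of the annotation files is not specified here.

```python
import json
from pathlib import Path


def load_split(split_path, annotation_dir):
    """Map each split name ("train", "test") to a list of annotation paths.

    'annotation_dir' is a hypothetical folder holding the per-sequence
    annotation JSON files named in the split file.
    """
    with open(split_path, "r", encoding="utf-8") as f:
        split = json.load(f)
    root = Path(annotation_dir)
    return {name: [root / fn for fn in files] for name, files in split.items()}
```

Since annotation files are named after their data folders, Path(fn).stem recovers the sequence folder name for each entry.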

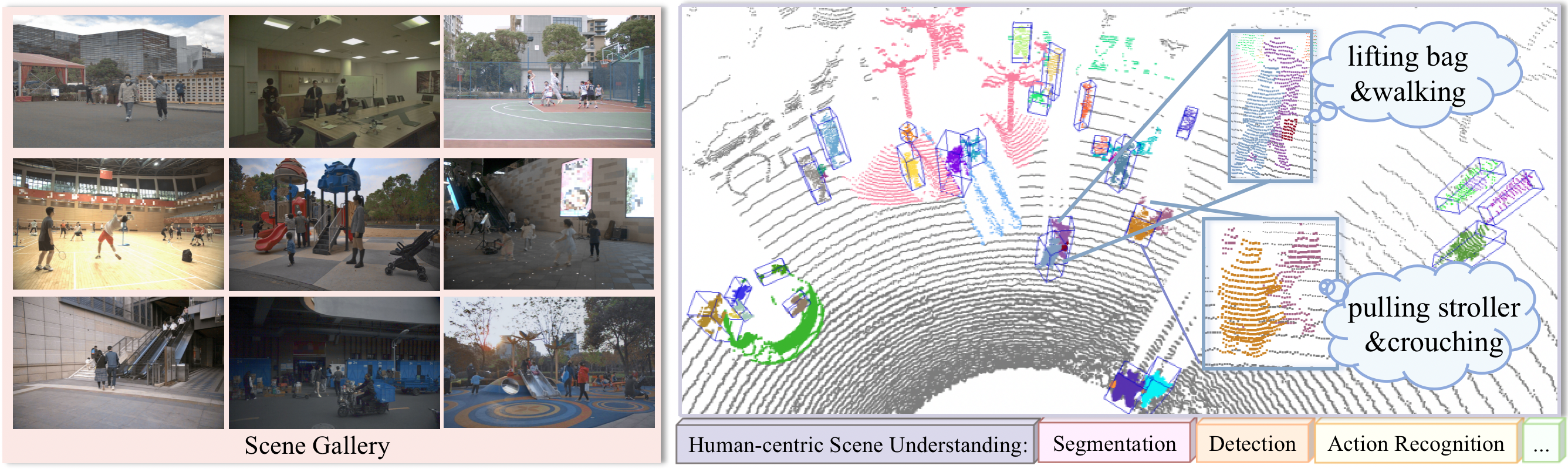

Figure 1. The left shows several scenes captured in HuCenLife, which covers diverse human-centric daily-life scenarios. The right demonstrates the rich annotations of HuCenLife, which can benefit many tasks for 3D scene understanding.
