LiDAR-based human motion capture has garnered significant interest in recent years for its practicality in large-scale and unconstrained environments. However, most methods rely on cleanly segmented human point clouds as input. When faced with noisy data, the accuracy and smoothness of their motion results are compromised, rendering them unsuitable for practical applications.

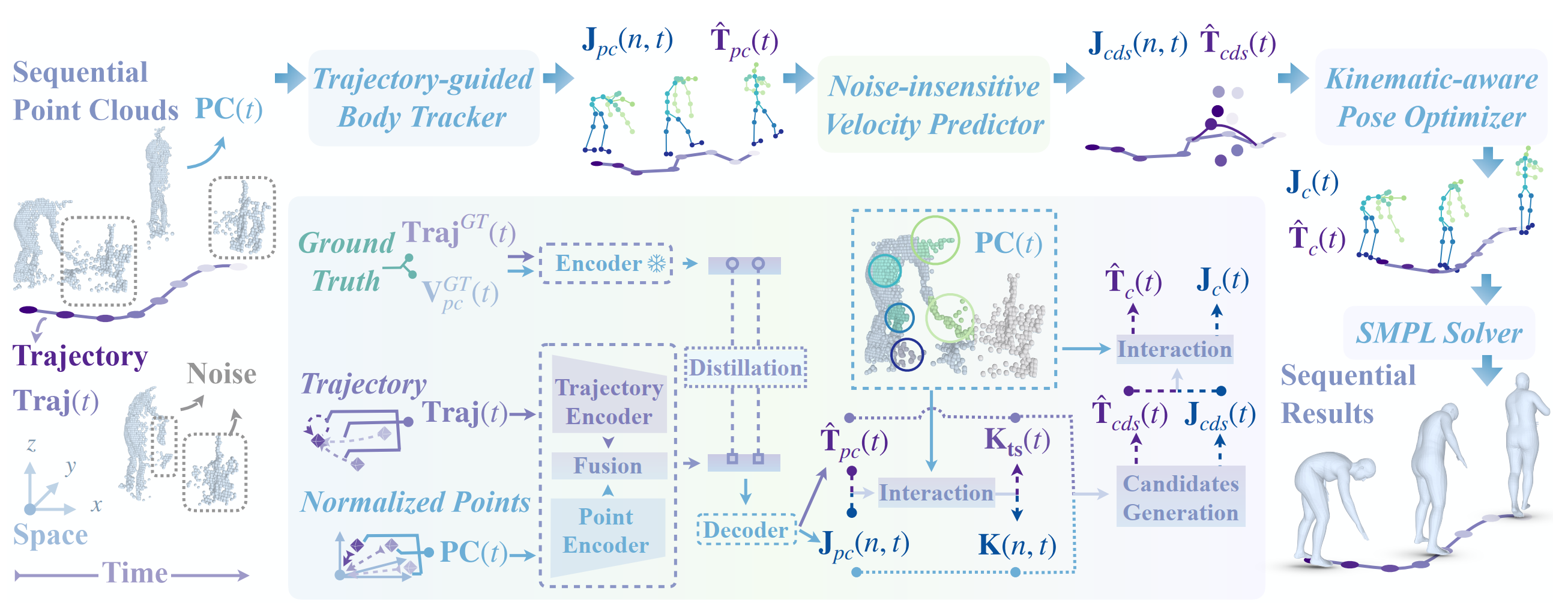

To address these limitations and improve the robustness and precision of motion capture under noise interference, we introduce LiveHPS++, an effective solution based on a single LiDAR system. Built on three carefully designed modules, our method learns dynamic and kinematic features from human movements and enables precise capture of coherent human motions in open settings, making it well suited to real-world scenarios.

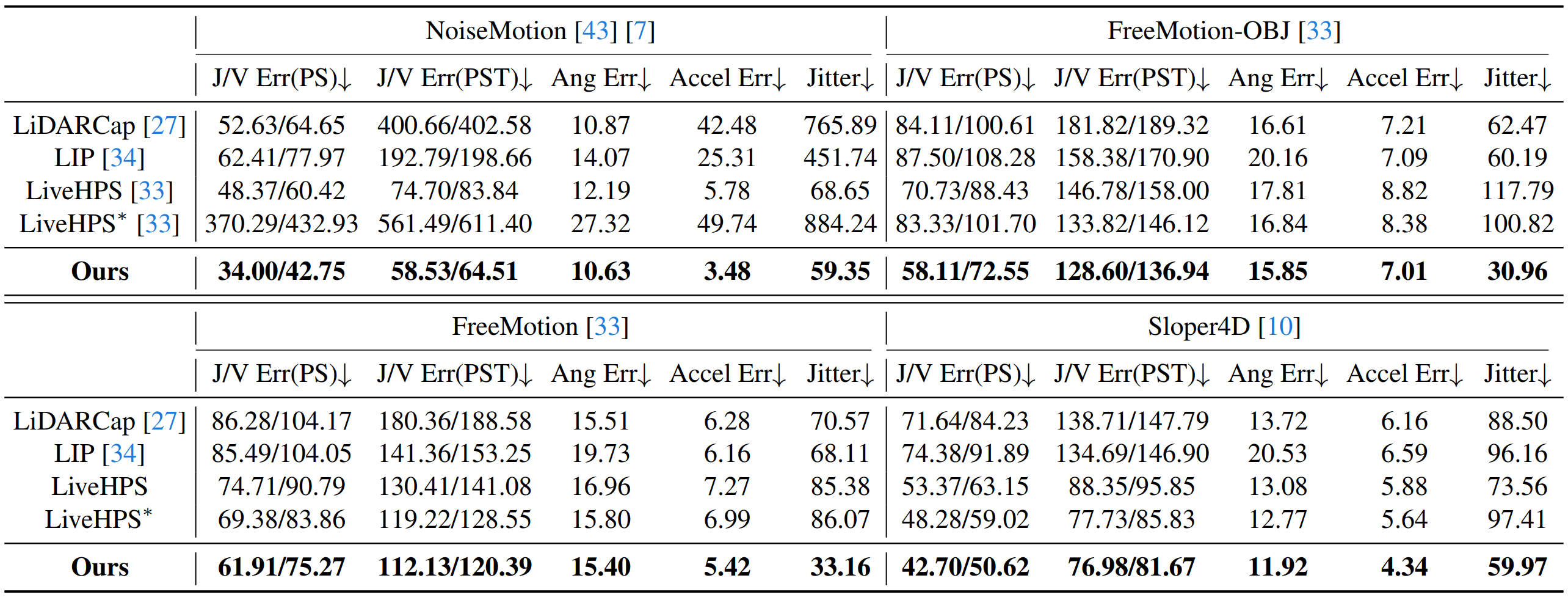

Extensive experiments show that LiveHPS++ significantly outperforms existing state-of-the-art methods across various datasets, establishing a new benchmark in the field.

Figure 1. Visualization of the motion capture performance of LiveHPS++ in a real-time captured scenario with complex human-object interaction. The left column shows reference images. The middle shows the noisy point clouds (top) and our corresponding mesh results (bottom). On the right, we zoom in on selected cases for clearer demonstration, with point clouds drawn in white.