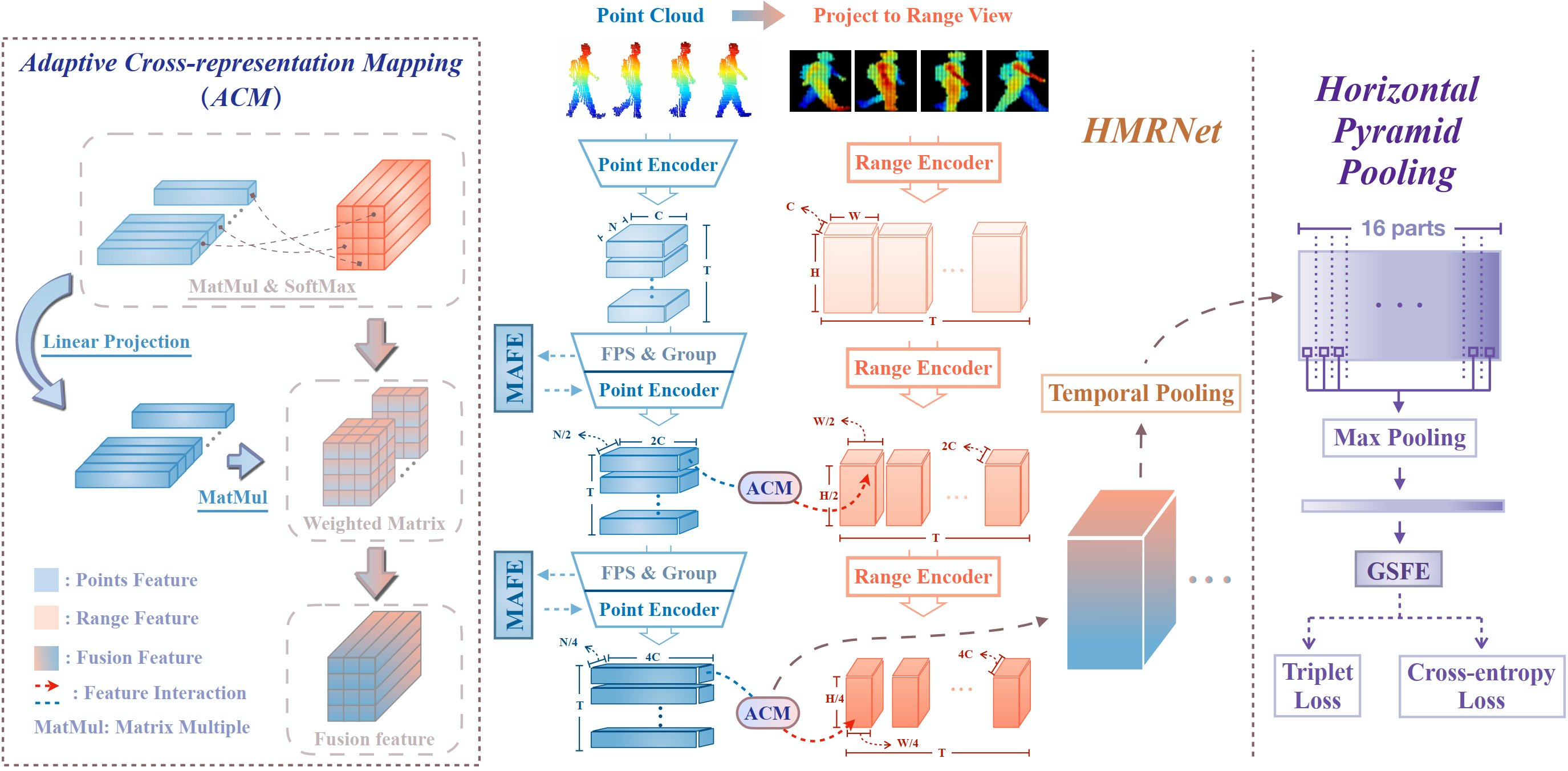

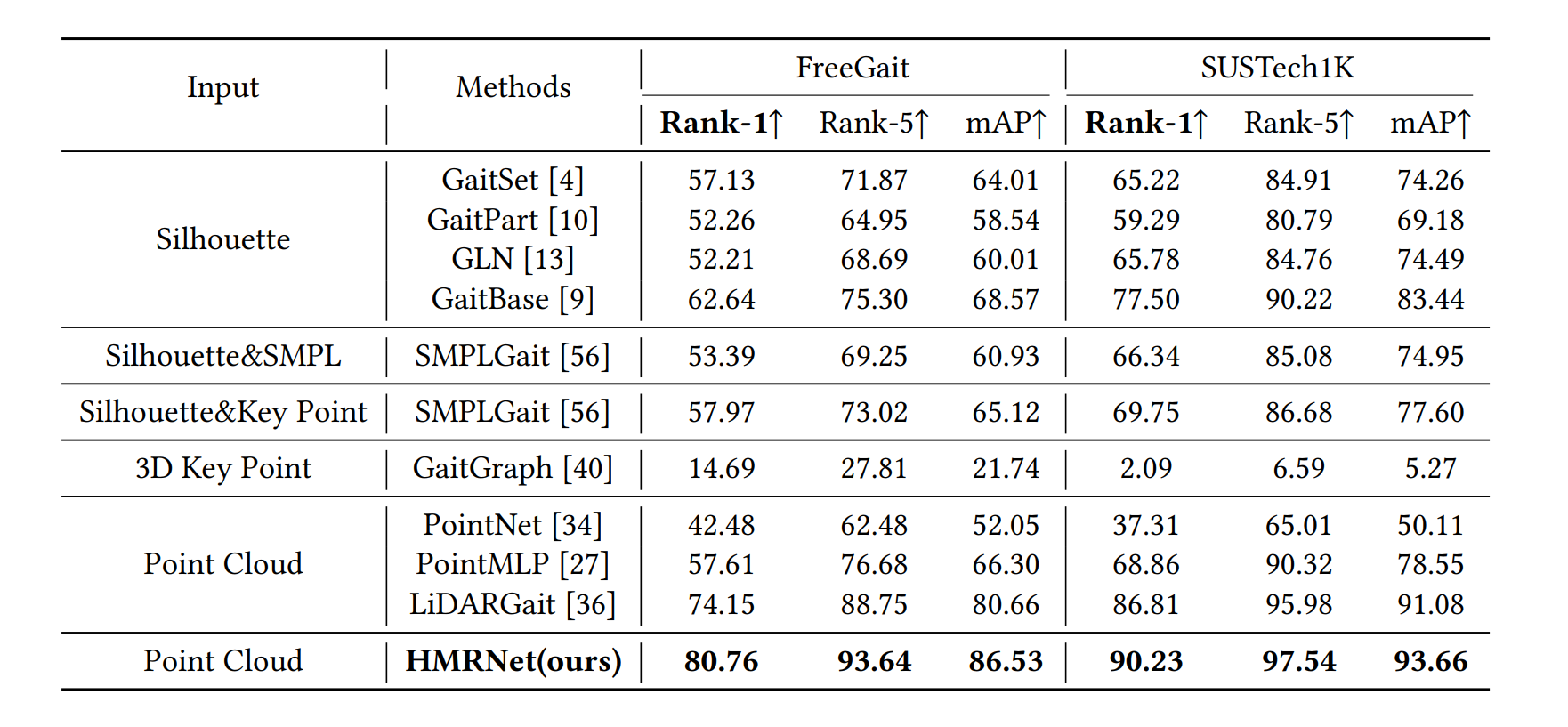

Human gait recognition is crucial in multimedia: it enables identification through walking patterns without direct interaction, enhancing integration across various media forms in real-world applications such as smart homes, healthcare, and non-intrusive security. LiDAR's ability to capture depth makes it pivotal for robotic perception and promising for real-world gait recognition. In this paper, we present the Hierarchical Multi-representation Feature Interaction Network (HMRNet), which performs robust gait recognition from a single LiDAR. Prevailing LiDAR-based gait datasets are primarily collected in controlled settings with predefined trajectories, leaving a gap with real-world scenarios. To facilitate LiDAR-based gait recognition research, we introduce FreeGait, a comprehensive gait dataset captured in large-scale, unconstrained settings and enriched with multi-modal and varied 2D/3D data. Notably, our approach achieves state-of-the-art performance on both the prior dataset (SUSTech1K) and FreeGait.

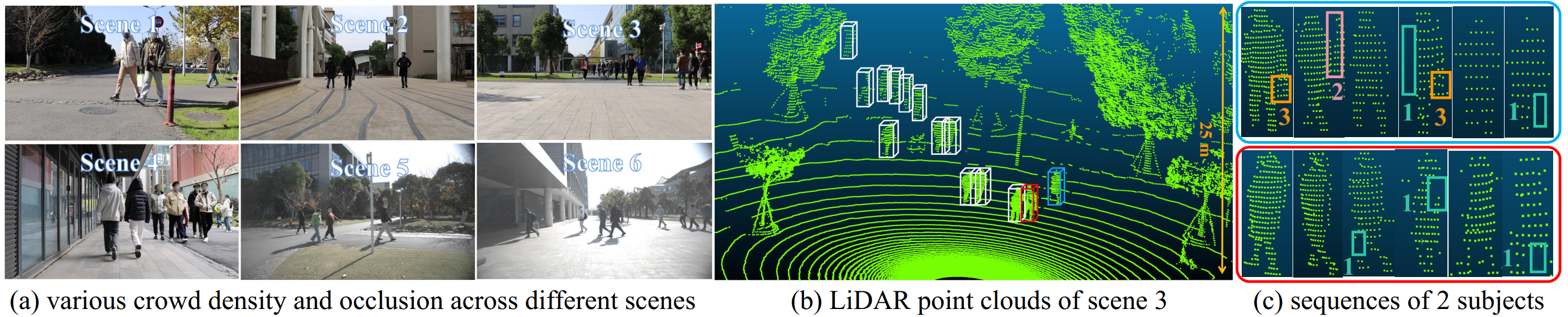

We propose FreeGait, a new LiDAR-based in-the-wild gait dataset covering varying crowd densities and occlusion levels across different real-life scenes.

FreeGait is captured in diverse, large-scale real-life scenarios with free trajectories, resulting in challenges such as (1) occlusions, (2) noise from crowds, and (3) noise from carried objects, as shown in the right part.
